The Weekly Product Review: Bridging Team Autonomy and Product Success

Empowering teams requires trust. Stream Teams are given the autonomy to achieve the product objectives they’ve been set, and they are accountable for outcomes rather than just outputs. However, autonomy does not mean operating in isolation: effective product development requires ongoing monitoring to ensure teams are driving meaningful impact. This is where the Weekly Product Review (WPR) comes in.

Amazon has a regular meeting called a Weekly Business Review. The goal is for senior leadership to “dive deep” and discuss all of the key product metrics for the core products in a business unit.

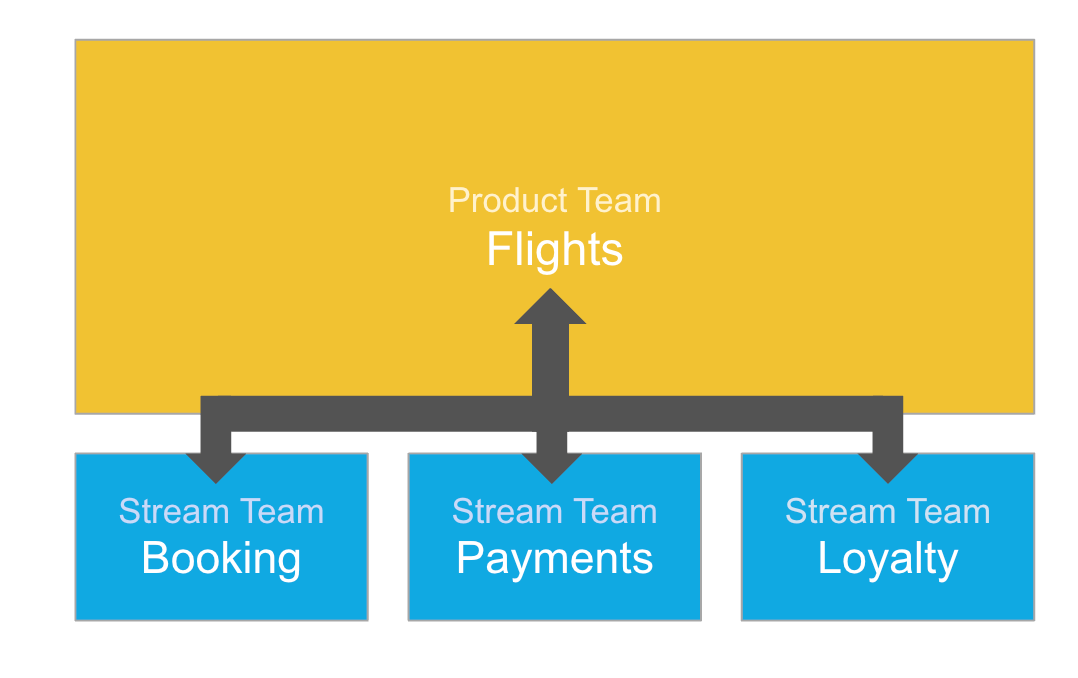

The Weekly Product Review is a similar meeting, but it focuses on a single product, and its audience is the Product Team and all of the Stream Teams working on that product. A lot of work has gone into making Stream Teams autonomous, so bringing them together every week might seem counter-productive. However, by focusing on the key product metrics, the WPR gives every team clear visibility of how the product is performing and how they can contribute.

The WPR Audience

Goals of a WPR

The WPR serves as a structured forum for teams to evaluate performance, identify emerging challenges, and refine their approach based on real-world data. The primary objectives of the WPR are to:

Identify key trends and patterns in product metrics.

Spot emerging issues before they become major problems.

Ensure alignment between Stream Teams and the overarching product strategy.

Improve decision-making by basing it on real data rather than assumptions.

Prepare for higher-level discussions, such as the Weekly Business Review (WBR), where senior leadership evaluates the performance of the business as a whole.

The Product Metrics Deck

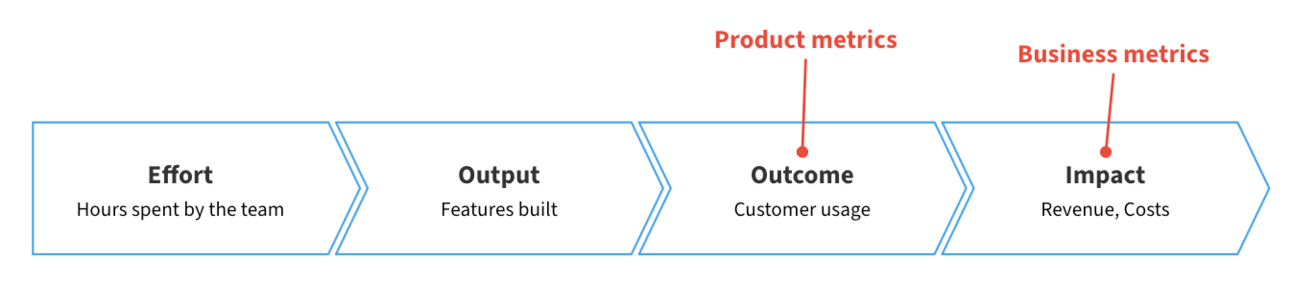

A critical component of the WPR is the Product Metrics Deck, which provides a clear, structured view of how the product is performing. The deck should contain a mix of product metrics (outcomes) and business metrics (impacts).

When a Stream Team is created, it is assigned core product metrics, such as usage statistics, feature adoption rates, or system performance, against which its success will be judged. The Product Team sets these initial product metrics when the Stream Team is first funded. While the Product Team puts a lot of effort into identifying the right product metrics for each Stream Team, they are ultimately assumptions about what will drive business success. Because they are assumptions, they must be continuously evaluated to confirm that the product metrics really do align with the business metrics. The WPR provides the opportunity to spot variances, and if a product metric is not strongly correlated with meaningful business impact, it should be refined or replaced.

Charts and Metrics

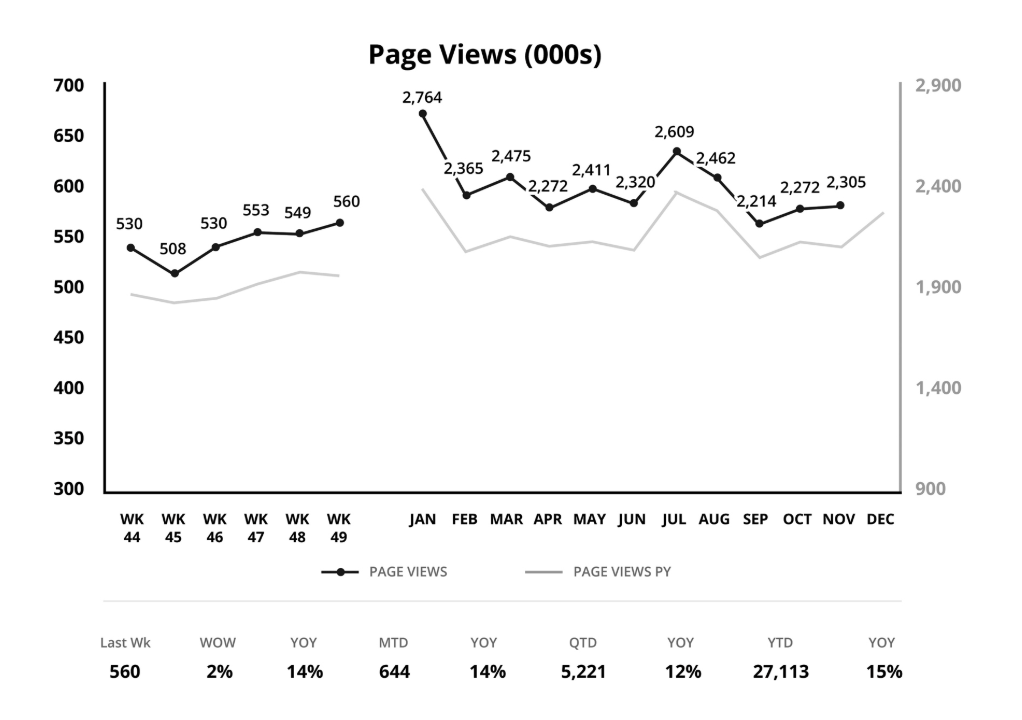

To provide meaningful insights, the deck should present data in consistent, easy-to-interpret visualisations. In their book Working Backwards, Bill Carr and Colin Bryar give an in-depth explanation of how charts are created for the Weekly Business Review at Amazon.

Source: Working Backwards, Bill Carr and Colin Bryar (2021)

Comparing trends over multiple timeframes: Using both short-term (trailing 6 weeks) and long-term (trailing 12 months) views helps surface patterns that might otherwise be missed.

Year-over-year comparison: Comparing each period with the same period in previous years helps to separate real changes from seasonal fluctuations.

Additional key data points: A small table underneath the chart calls out key data points (Week to Date, Month to Date, Quarter to Date, Year to Date, etc.) and their percentage change versus the same period last year.
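The three chart elements above can be sketched as a few small functions over daily metric values. This is a minimal illustration, not Amazon's implementation: it assumes the metric is stored as a dict mapping each date to a daily total, that weeks start on Monday, and that "the same period last year" means the same calendar dates one year earlier (with 29 February mapped to 28 February). All function names are hypothetical.

```python
from datetime import date, timedelta
from typing import Optional

Daily = dict[date, float]  # assumed storage: date -> daily metric total


def same_date_last_year(d: date) -> date:
    """Same calendar date one year earlier; 29 February maps to 28 February."""
    try:
        return d.replace(year=d.year - 1)
    except ValueError:
        return d.replace(year=d.year - 1, day=28)


def total(daily: Daily, start: date, end: date) -> float:
    """Sum of daily values between start and end, inclusive."""
    return sum(v for d, v in daily.items() if start <= d <= end)


def trailing_weeks(daily: Daily, as_of: date, weeks: int = 6) -> list[float]:
    """Short-term view: 7-day totals for the trailing `weeks` weeks, oldest first."""
    ends = [as_of - timedelta(days=7 * i) for i in range(weeks - 1, -1, -1)]
    return [total(daily, end - timedelta(days=6), end) for end in ends]


def trailing_months(daily: Daily, as_of: date, months: int = 12) -> list[float]:
    """Long-term view: calendar-month totals for the trailing `months` months,
    oldest first. The current month is partial (up to and including as_of)."""
    totals = []
    for i in range(months - 1, -1, -1):
        year, month0 = divmod(as_of.year * 12 + as_of.month - 1 - i, 12)
        totals.append(sum(v for d, v in daily.items()
                          if d.year == year and d.month == month0 + 1 and d <= as_of))
    return totals


def key_data_points(daily: Daily, as_of: date) -> dict[str, tuple[float, Optional[float]]]:
    """WTD/MTD/QTD/YTD totals and their % change versus the same period last year."""
    quarter_start_month = 3 * ((as_of.month - 1) // 3) + 1
    starts = {
        "WTD": as_of - timedelta(days=as_of.weekday()),  # assumes weeks start on Monday
        "MTD": as_of.replace(day=1),
        "QTD": as_of.replace(month=quarter_start_month, day=1),
        "YTD": as_of.replace(month=1, day=1),
    }
    table = {}
    for label, start in starts.items():
        current = total(daily, start, as_of)
        previous = total(daily, same_date_last_year(start), same_date_last_year(as_of))
        # None when there is no prior-year baseline to compare against
        change = None if previous == 0 else 100 * (current - previous) / previous
        table[label] = (current, change)
    return table
```

Calling `trailing_weeks` and `trailing_months` a second time with `same_date_last_year(as_of)` gives the prior-year series to plot alongside the current one for the year-over-year comparison.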

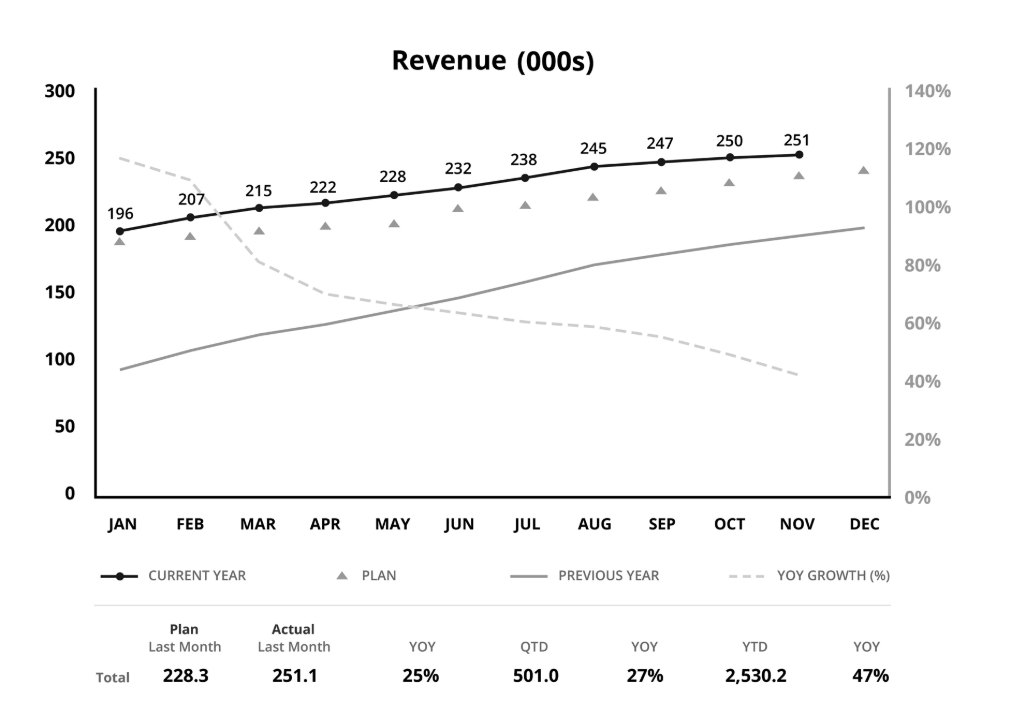

A final metric that is often added to the charts is the Year-on-Year Growth Rate. Small variations in an absolute value can be hard to detect over time, whereas the growth rate makes it much easier to spot trends before they become big problems.

Source: Working Backwards, Bill Carr and Colin Bryar (2021)
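The year-on-year growth rate for a week is its percentage change against the same week in the prior year. The sketch below is a minimal illustration, assuming the two years' weekly values are already aligned week by week; the function names and the "growth fell three weeks in a row" warning rule are hypothetical, not a documented convention.

```python
from typing import Optional


def yoy_growth(this_year: list[float], last_year: list[float]) -> list[Optional[float]]:
    """Week-by-week growth in percent: (this year - last year) / last year * 100.
    Returns None for weeks with no prior-year value, rather than dividing by zero."""
    if len(this_year) != len(last_year):
        raise ValueError("series must be aligned week by week")
    return [None if prev == 0 else 100.0 * (cur - prev) / prev
            for cur, prev in zip(this_year, last_year)]


def growth_slowing(rates: list[Optional[float]], weeks: int = 3) -> bool:
    """Hypothetical early-warning rule: growth fell in each of the last `weeks` steps.
    Weeks without a prior-year baseline (None) are skipped."""
    recent = [r for r in rates if r is not None][-(weeks + 1):]
    return len(recent) == weeks + 1 and all(b < a for a, b in zip(recent, recent[1:]))


# The absolute value barely moves between the last two weeks (121 -> 120),
# but the growth rate drops from 10% to 0%.
print(yoy_growth([110, 121, 120], [100, 110, 120]))  # -> [10.0, 10.0, 0.0]
```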

Anecdotes

Data tells us what is happening, but not why. When preparing for a Weekly Product Review, the Stream Teams should review the data and identify any anomalies or poorly performing metrics. The Product Team will want to know what is causing the problem, so the Stream Team needs to build a narrative around the data.

One of the best options is to add relevant customer quotes from customer interviews to the Product Metrics Deck. This provides a direct link between the data and the customer experience.

In other cases, the Stream Team may need to do some additional analysis to understand the root cause of the problem. Working Backwards gives the example of products with negative Contribution Profit (CP). Each product could turn negative for a different reason: incorrect size and weight information entered into the system, resulting in higher-than-expected shipping costs; over-purchasing that leads to a discounting strategy; or bulky products that are expensive to ship. The data point is the same for each of these products, but the root causes, and the potential solutions, are different.
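Spotting the anomalies in the first place can be partly automated before the narrative is written. The sketch below is one simple approach, not a method prescribed for the WPR: it flags a week whose value sits more than a chosen number of standard deviations from the mean of the preceding weeks. The six-week baseline (mirroring the trailing six-week chart) and the threshold of 2.0 are assumptions to tune per metric. A flag only says that something changed; the Stream Team still has to find out why.

```python
from statistics import mean, stdev


def flag_anomalies(series: list[float], baseline_weeks: int = 6,
                   threshold: float = 2.0) -> list[int]:
    """Indexes of weeks that deviate from the preceding `baseline_weeks` weeks
    by more than `threshold` standard deviations (a simple z-score test)."""
    if baseline_weeks < 2:
        raise ValueError("stdev needs a baseline of at least 2 weeks")
    flagged = []
    for i in range(baseline_weeks, len(series)):
        window = series[i - baseline_weeks:i]
        spread = stdev(window)
        if spread == 0:
            # A perfectly flat baseline: any change at all is unusual.
            if series[i] != window[0]:
                flagged.append(i)
        elif abs(series[i] - mean(window)) / spread > threshold:
            flagged.append(i)
    return flagged


weekly_signups = [100, 102, 98, 101, 99, 100, 100, 70]  # illustrative numbers only
print(flag_anomalies(weekly_signups))  # -> [7]
```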

Running an Effective Weekly Product Review

The WPR is a very expensive meeting: every week it takes time from the Product Team and from a representative of each Stream Team. This means there needs to be a heavy focus on efficiency, so that it is a high-impact meeting rather than just another status update.

As mentioned above, Stream Teams should come to the meeting prepared, as they will be expected to give a concise explanation of any variances against expectations. To streamline the meeting, each Stream Team should select a single person to represent it. It’s a waste of everybody’s time if that person is not prepared to answer the questions that may arise.

The session should follow these best practices:

Follow the deck: Cover metrics in a consistent order, ensuring discussions remain efficient and predictable.

Focus on signals, not noise: Don’t get lost in small fluctuations; track meaningful trends and correlations.

Focus on the problem, not the person: Teams should expect questions about why decisions were made and how situations came about. However, the questions should aim at understanding, so that solutions can be identified, rather than at attributing blame. People need to be able to admit mistakes rather than try to cover them up.

Keep the focus on metrics: The WPR is not a forum for strategic discussions or development status updates. It should strictly focus on data and performance trends.

Quick handovers: People should be ready to jump in to answer questions when their metrics are being reviewed.

Ensure follow-up actions are clearly assigned: Every meeting should result in actionable tasks, with owners accountable for driving resolution.

The Product Team will attend a Weekly Business Review with senior management shortly after the WPR. The same metrics will be reviewed there, and the Product Team knows it will be quizzed on every variance, so it needs to be comfortable with the root causes and potential solutions.

Maintaining Focus

Over time, new metrics may be identified and added to the deck. If this is not managed, the bloat can extend the meeting without providing real benefit. Every metric should be periodically reviewed to ensure that it is driving real decisions rather than acting as filler.
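One lightweight way to support that periodic review is to record, for each metric in the deck, the weeks in which it led to a follow-up action, and to list the metrics that have not done so recently. This is a hypothetical sketch: the `DeckMetric` record, the week numbering and the 12-week look-back are illustrative assumptions, and the output is a list of candidates for discussion, not an automatic removal rule.

```python
from dataclasses import dataclass, field


@dataclass
class DeckMetric:
    """Hypothetical record of a metric in the Product Metrics Deck."""
    name: str
    # Week numbers in which reviewing this metric produced a follow-up action.
    action_weeks: list[int] = field(default_factory=list)


def pruning_candidates(metrics: list[DeckMetric], current_week: int,
                       lookback: int = 12) -> list[str]:
    """Names of metrics with no follow-up action in the last `lookback` weeks."""
    cutoff = current_week - lookback
    return [m.name for m in metrics
            if not any(week > cutoff for week in m.action_weeks)]


deck = [
    DeckMetric("activation rate", [40, 48]),
    DeckMetric("page views", [20]),
]
print(pruning_candidates(deck, current_week=50))  # -> ['page views']
```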

Conclusion

The Weekly Product Review serves as a crucial bridge between autonomous Stream Teams and overall product success. The WPR's effectiveness stems from its focus on data-driven decision making, clear ownership of metrics, and the space it creates for honest discussion of challenges and opportunities. By maintaining a consistent cadence of metric review and improvement, teams can spot trends early, make informed decisions, and drive meaningful impact for their customers and business.

For product teams considering implementing or improving their WPR process, remember that it's not about perfect execution from day one. Start with a core set of meaningful metrics, establish a culture of constructive discussion, and continuously refine both the metrics and the process itself. The goal is to create a sustainable practice that drives real improvement while respecting the autonomy of your Stream Teams.

As your WPR practice matures, you'll find it becomes more than just a meeting: it becomes a cornerstone of your product development culture, driving alignment, fostering collaboration, and ultimately delivering better outcomes for your customers.